My Machine Learning Journey

Introduction

Before February 2017, I was intrigued by Machine Learning but didn’t understand how it worked. This post details my journey from that initial curiosity to becoming proficient in Machine Learning, highlighting key milestones, projects, and the lessons I learned along the way.

Early Days of Exploration

As Arthur C. Clarke said, “Any sufficiently advanced technology is indistinguishable from magic.” Machine Learning seemed like magic when I first heard about it. The turning point came when I prepared to attend a presentation by Hugo Larochelle in Montreal. Determined to grasp the basics before the presentation, I spent a weekend reading and trying to understand Neural Networks.

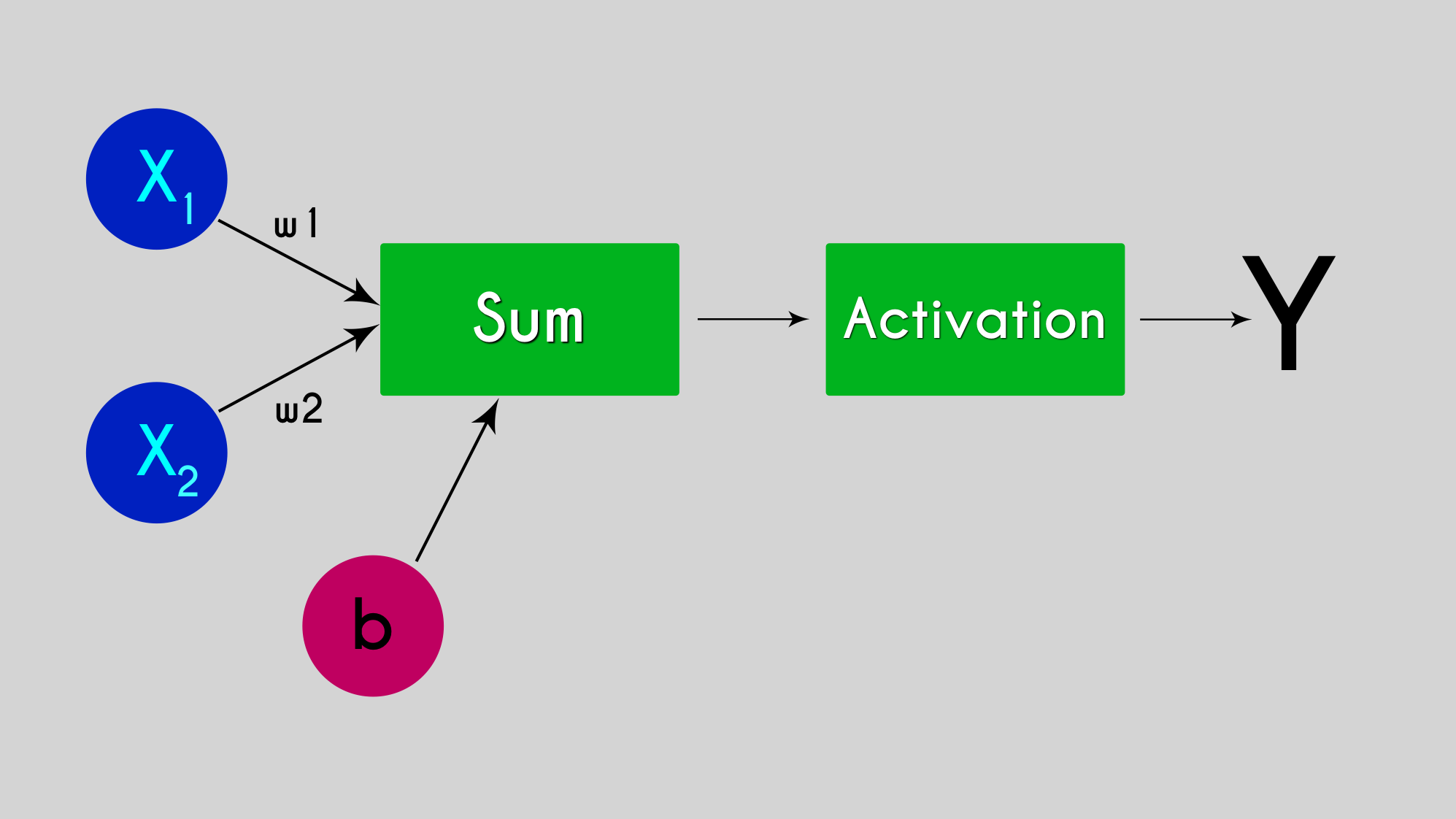

The first “Eureka” moment came when I realized that learning in a Neural Network means finding values for the weights in relatively simple equations like Y = wX + b, and when I started to grasp the concept of Gradient Descent.
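To make that idea concrete, here is a minimal sketch of Gradient Descent learning w and b for Y = wX + b. It is only an illustration: the function name `fit_line`, the toy data, the learning rate and the number of steps are all assumptions chosen for this example, and the loss used is the mean squared error.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error.

    Hypothetical helper for illustration; hyper-parameters are arbitrary.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error of every sample with the current w and b.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1, so the learned values
# should approach w = 2 and b = 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(w, b)
```

A real Neural Network has many more weights and uses Backpropagation to compute the gradients, but the update step is the same idea: nudge each weight in the direction that reduces the error.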

I realized that a lot of Math was involved, which was a good thing for me: I love Math. However, my overall understanding was still fuzzy.

First Courses

Stanford University - Machine Learning with Andrew Ng (March - May 2018)

Andrew Ng is the kind of professor everyone wishes they had. Important concepts like Backpropagation and Gradient Descent were explained by building an intuition first, before we implemented them ourselves. This course laid a solid foundation for my understanding of Machine Learning.

DeepLearning.AI - Deep Learning Specialization (May - August 2018)

This specialization covered all the basics of Neural Networks for classification and regression, as well as the specific model architectures used for Computer Vision and Natural Language Processing.

It also included a comprehensive course on hyper-parameters and their application in real projects, which showed how to progress toward a model that works in production, not just as a toy experiment.

Book - Deep Learning with Python - François Chollet

This book could have been named Machine Learning and Deep Learning with Keras. It is very practical and a good complement to my Deep Learning Specialization. It allowed me to create models with TensorFlow/Keras for the first time.

A lot of articles, Jupyter notebooks and courses will show you the basics of training a model. Once a model is trained in your Jupyter notebook, you may get a dopamine rush from the impression of gained knowledge.

But in reality this is only skin deep.

Deep learning (pun intended) only comes when you try to create and implement a model for a real application.

Practical Experience and Projects

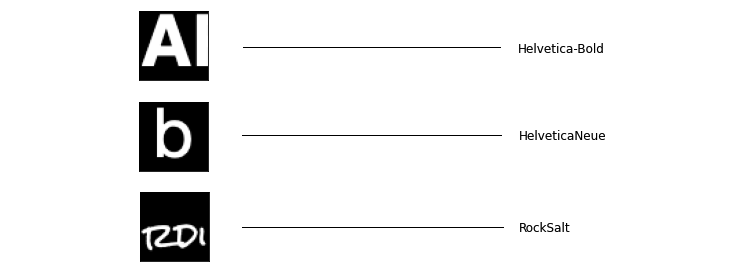

Project - Font Recognition

Having worked a lot with fonts in my apps A+ Signature and Mix on Pix, I decided to create a model to recognize fonts from images. I estimated that the project workflow would involve:

- Taking a picture of text with a phone.

- Extracting the text from the image.

- Normalizing the image for color, shade and camera angle.

- Creating a Deep Learning model to identify the closest font.

- Displaying the closest font and its name to the user, possibly with a link to download or purchase it.

For the Text Extraction, I experimented with models from Apple and Google and found Google’s model superior. However, I realized that there would be big challenges with normalization, and with font licensing if I wanted to show the actual closest font.

Eventually, I created a simpler project based on generated images of text, which can be found in my GitHub repo font-from-image.

Through this, I learned:

- Extensive use of TensorFlow.

- The importance of all the code around the model for data preparation and application integration.

Another important realization was that without using Machine Learning, I wouldn’t have known how to attack this problem programmatically.

Project - Building a Custom ML Machine

In December 2018, I built a Linux machine with an Nvidia RTX 2080 graphics card, which significantly reduced training times.

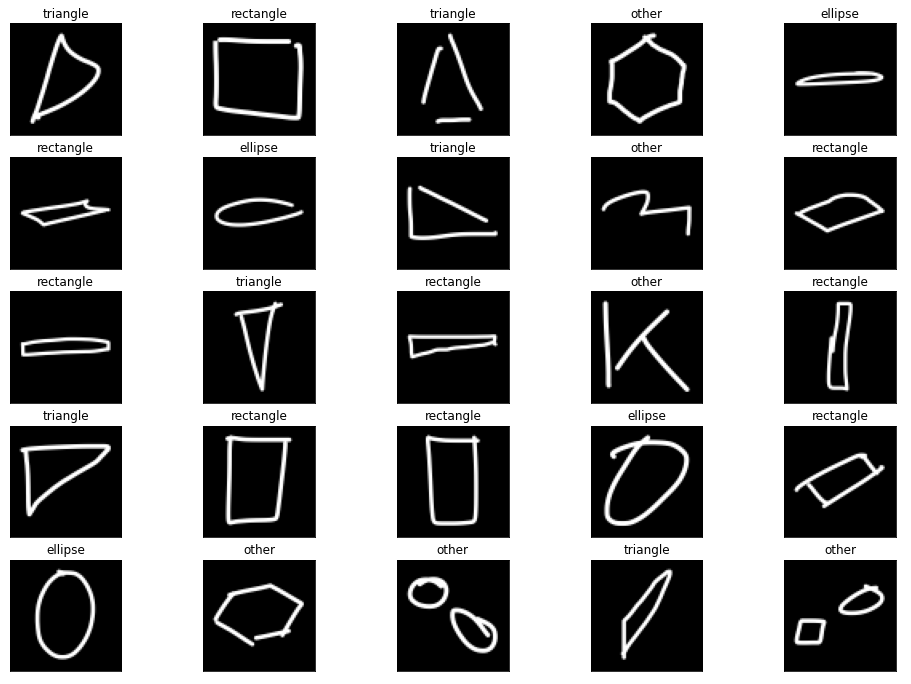

Project - Auto-Shapes models for Mix on Pix

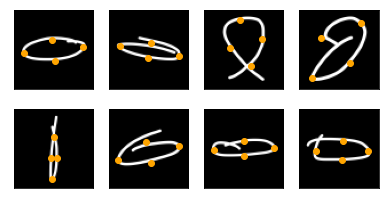

In Mix on Pix, users can draw shapes to annotate pictures. I aimed to create an Auto-Shapes feature where my model would automatically determine the shape the user intended in their drawing. The project involved:

- Creating data for shapes without requiring near-perfect drawings.

- Developing labeling tools.

- Using Data Augmentation with rotation.

- Ensuring a consistent bias towards shape and vertex positions. Yes, sometimes bias is a positive thing.

More details can be found in my GitHub repo hand-drawn-shapes-dataset.

Key learnings included:

- The difficult and time-consuming task of creating and labeling data at scale.

- The need to create my own data augmentation tools for vertices, as the label values change during augmentation.
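To illustrate why the labels change, here is a minimal sketch of rotating vertex labels. It assumes the vertices are stored as normalized (x, y) pairs and that the rotation is around the image center; both are assumptions for this example and not necessarily the format used in the repo. The function name `rotate_vertices` is hypothetical.

```python
import math


def rotate_vertices(vertices, angle_degrees, center=(0.5, 0.5)):
    """Rotate (x, y) vertex labels around `center` by `angle_degrees`.

    Assumes normalized coordinates in [0, 1]. The image itself must be
    rotated by the same angle, with the same direction convention, or the
    labels will no longer match the drawing.
    """
    theta = math.radians(angle_degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    cx, cy = center
    rotated = []
    for x, y in vertices:
        # Move the point so the rotation center becomes the origin.
        dx, dy = x - cx, y - cy
        # Apply the standard 2D rotation, then move the point back.
        rotated.append((cx + dx * cos_t - dy * sin_t,
                        cy + dx * sin_t + dy * cos_t))
    return rotated


# A triangle label and the same label after a 90-degree rotation.
triangle = [(0.5, 0.1), (0.9, 0.9), (0.1, 0.9)]
rotated_triangle = rotate_vertices(triangle, 90)
print(rotated_triangle)
```

With a classification label such as "triangle", rotating the image leaves the label untouched. With vertex labels, every augmented image needs its labels recomputed like this, which is why generic image augmentation alone is not enough.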

Progressively adding more data and more Data Augmentation allowed me to really see the models go from "I don't know" to "now I get it". It was very similar to seeing my son learn a concept that he could not grasp the day before.

Continued Learning and Specializations

I continued to improve my knowledge through various specializations:

DeepLearning.AI - TensorFlow Developer Specialization (Oct-Nov 2020)

Good to get familiar with all the basics of TensorFlow.

DeepLearning.AI - Natural Language Processing Specialization (Dec 2020-Jan 2021)

Good for learning classic NLP approaches and newer approaches like Transformers (the T in GPT).

DeepLearning.AI - TensorFlow: Advanced Techniques Specialization (Feb-Mar 2021)

Really interesting to go beyond simple models.

DeepLearning.AI - AI for Medicine Specialization (Apr-May 2021)

Good for learning classic approaches and the U-Net architecture.

Columbia University - Decision Making and Reinforcement Learning (June-July 2023)

It got me started in Reinforcement Learning, but I could have skipped this one.

University of Alberta - Reinforcement Learning Specialization (July-Sept 2023)

Much better than I expected. I really liked that they "force" you to read parts of the book Reinforcement Learning: An Introduction, by Sutton and Barto.

Advice for Beginners

If I were to start my journey today, I would begin with these courses:

Machine Learning Specialization by Andrew Ng

This revamped version of the course I took in 2018 provides a solid foundation.

Deep Learning Specialization by Andrew Ng

The updated version of the 2017 specialization covers all the essential aspects of Deep Learning.

These courses are beneficial for building a strong base in Machine Learning and Deep Learning.

Conclusion

My Machine Learning journey has been filled with learning, experimentation, and growth. From understanding the basics to tackling more complex projects, each step has been rewarding.

I encourage anyone interested in Machine Learning to take these recommended courses or other similar courses. Although Generative AI seems to take all the space these days, it is best to get the basics right.

January 2026 update

In the last 2 years, I took additional courses and wrote a bit about ML.

Courses:

- Many LLM/MCP-related short courses from DeepLearning.AI

- The new Machine Learning Specialization by Andrew Ng, which I wrote about here

- The PyTorch for Deep Learning Professional Certificate, since PyTorch is used more than TensorFlow for LLMs

- The Fine-tuning and Reinforcement Learning for LLMs: Intro to Post-Training course

Blog Posts:

Initially, I found LLMs interesting as a user, but not that much as an ML practitioner because of their humongous size.

Lately, I find that fine-tuning Small Language Models (SLM) can have a lot of value for specialized chatbots and for Agentic AI.

Actually, the world of AI is moving fast, so it is pretty exciting...